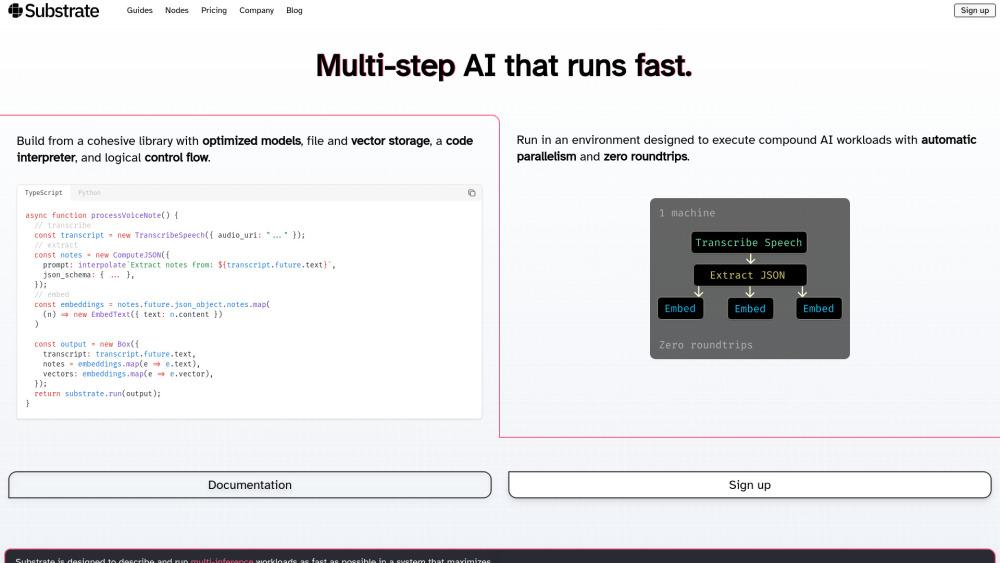

Substrate - AI Inference Platform for Maximum Parallelism

Product Information

Key Features of Substrate - AI Inference Platform for Maximum Parallelism

Substrate is an AI inference platform for high-performance, multi-inference workloads that run with a high degree of parallelism.

Real-time Inference Processing

Process multiple AI workloads simultaneously with minimal latency and maximum throughput.

Advanced Data Locality

Optimize data placement and access to minimize data transfer and maximize performance.

Scalable Architecture

Seamlessly scale AI workloads to meet evolving demands and ensure maximum performance.

Multi-Inference Support

Run multiple AI inference workloads concurrently to maximize resource utilization and efficiency.
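As a rough illustration of what running inference workloads concurrently means, the sketch below uses Python's standard `asyncio` library. It does not use Substrate's API, which this listing does not describe: `run_inference`, `run_all`, and the simulated delays are hypothetical stand-ins for real model calls.

```python
import asyncio


async def run_inference(name: str, payload: str, delay: float) -> dict:
    # Hypothetical stand-in for a model call; the sleep simulates inference latency.
    await asyncio.sleep(delay)
    return {"workload": name, "output": payload.upper()}


async def run_all(workloads: list[tuple[str, str, float]]) -> list[dict]:
    # gather() runs the coroutines concurrently and returns results in input order.
    return await asyncio.gather(
        *(run_inference(name, payload, delay) for name, payload, delay in workloads)
    )


if __name__ == "__main__":
    results = asyncio.run(
        run_all([("vision", "frame-001", 0.05), ("speech", "clip-007", 0.02)])
    )
    for result in results:
        print(result)
```

Because the two simulated calls overlap, total wall-clock time is close to the slowest call rather than the sum of both, which is the effect concurrent inference aims for.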

High-Performance Computing

Use optimized hardware acceleration to speed up AI inference.

Use Cases of Substrate - AI Inference Platform for Maximum Parallelism

Deploy AI models for computer vision and speech recognition applications.

Accelerate natural language processing and recommendation engines with multi-inference workloads.

Use Substrate for edge AI, autonomous vehicles, and robotics applications requiring real-time inference processing.

Pros and Cons of Substrate - AI Inference Platform for Maximum Parallelism

Pros

- Maximum parallelism for concurrent AI inference workloads.

- High-performance computing for real-time inference processing and analytics.

Cons

- Steep learning curve due to complex architecture and customized configurations.

- Resource-intensive, requiring significant computational power and memory.

How to Use Substrate - AI Inference Platform for Maximum Parallelism

1. Integrate Substrate with your preferred AI framework and model.

2. Configure and optimize the Substrate platform for specific AI workloads.

3. Deploy and manage AI inference workloads with real-time monitoring and analytics.
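The three steps above can be pictured with a minimal, framework-agnostic sketch. Everything in it is an assumption made for illustration: `WorkloadConfig`, `Deployment`, and `toy_model` are hypothetical names, not part of Substrate, and the "monitoring" is just per-batch latency recorded in memory.

```python
import time
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable


@dataclass
class WorkloadConfig:
    # Hypothetical per-workload settings (step 2: configure).
    name: str
    batch_size: int = 4


@dataclass
class Deployment:
    # Wraps any callable model (step 1: integrate) and records latency (step 3: monitor).
    model: Callable[[list[str]], list[str]]
    config: WorkloadConfig
    latencies_ms: list[float] = field(default_factory=list)

    def infer(self, inputs: list[str]) -> list[str]:
        outputs: list[str] = []
        # Split the inputs into batches of the configured size.
        for start in range(0, len(inputs), self.config.batch_size):
            batch = inputs[start:start + self.config.batch_size]
            t0 = time.perf_counter()
            outputs.extend(self.model(batch))
            self.latencies_ms.append((time.perf_counter() - t0) * 1000)
        return outputs

    def stats(self) -> dict:
        # Summarize what has been observed so far.
        avg = mean(self.latencies_ms) if self.latencies_ms else 0.0
        return {"batches": len(self.latencies_ms), "mean_latency_ms": avg}


def toy_model(batch: list[str]) -> list[str]:
    # Placeholder "model" that reverses each input string.
    return [text[::-1] for text in batch]


if __name__ == "__main__":
    deployment = Deployment(toy_model, WorkloadConfig("demo", batch_size=2))
    print(deployment.infer(["ab", "cd", "ef"]))
    print(deployment.stats())
```

In a real setup, the placeholder model would be replaced by a call into the chosen AI framework, and the in-memory latency list by whatever monitoring the platform provides.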