Wear-Any-Way: Manipulable Virtual Try-on via Sparse Correspondence Alignment

Product Information

Key Features of Wear-Any-Way: Manipulable Virtual Try-on via Sparse Correspondence Alignment

Wear-Any-Way supports single/multiple garment try-on, model-to-model settings, and manipulable virtual try-on via sparse correspondence alignment.

Manipulable Virtual Try-on

Wear-Any-Way enables users to precisely manipulate the wearing style through point-based control.

Single/Multiple Garment Try-on

Wear-Any-Way supports try-on with a single garment or several garments at once, across input types including shop-to-model, model-to-model, shop-to-street, model-to-street, and street-to-street.

Model-to-Model Settings

Wear-Any-Way supports model-to-model settings, transferring a garment worn by one person onto another, including in complicated scenarios.

Sparse Correspondence Alignment

Wear-Any-Way uses sparse correspondence alignment to achieve state-of-the-art performance for standard virtual try-on.

Customizable Generation

Wear-Any-Way lets users assign an arbitrary number of control points on the garment image and the person image to customize the generation.
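This page does not describe how control points are represented internally. As a hypothetical illustration only, the Python sketch below shows one common way to turn an arbitrary number of garment-to-person point pairs into paired Gaussian heatmaps that a conditioning network could consume. `PointPair`, `encode_pairs`, and the `sigma` parameter are illustrative names assumed here, not part of Wear-Any-Way, and the actual method may encode points quite differently.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PointPair:
    """Hypothetical: one user-assigned correspondence, in pixel coordinates.

    garment_xy is a point on the garment image; person_xy is where that
    part of the garment should land on the person image.
    """
    garment_xy: tuple[float, float]
    person_xy: tuple[float, float]


def _check_inside(xy, width, height, label):
    # Reject points that fall outside the image they refer to.
    x, y = xy
    if not (0 <= x < width and 0 <= y < height):
        raise ValueError(f"{label} point {xy} is outside a {width}x{height} image")


def gaussian_heatmap(center, width, height, sigma=2.0):
    # Row-major map (height rows x width columns) peaking at 1.0 on the center pixel.
    cx, cy = center
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2)) for x in range(width)]
        for y in range(height)
    ]


def encode_pairs(pairs, garment_size, person_size, sigma=2.0):
    """Assumed encoding: one heatmap per pair on each image.

    Channel k of the garment maps and channel k of the person maps describe
    the same pair, which is what ties the two points together. Any number of
    pairs is accepted, including zero.
    """
    gw, gh = garment_size
    pw, ph = person_size
    garment_maps, person_maps = [], []
    for pair in pairs:
        _check_inside(pair.garment_xy, gw, gh, "garment")
        _check_inside(pair.person_xy, pw, ph, "person")
        garment_maps.append(gaussian_heatmap(pair.garment_xy, gw, gh, sigma))
        person_maps.append(gaussian_heatmap(pair.person_xy, pw, ph, sigma))
    return garment_maps, person_maps
```

For example, `encode_pairs([PointPair((10, 5), (12, 20))], (64, 64), (64, 96))` returns one 64x64 garment map and one 96-row by 64-column person map. Keeping the pairing in the channel index, rather than in a fixed-size array, is what lets the number of control points vary freely in this sketch.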

Use Cases of Wear-Any-Way: Manipulable Virtual Try-on via Sparse Correspondence Alignment

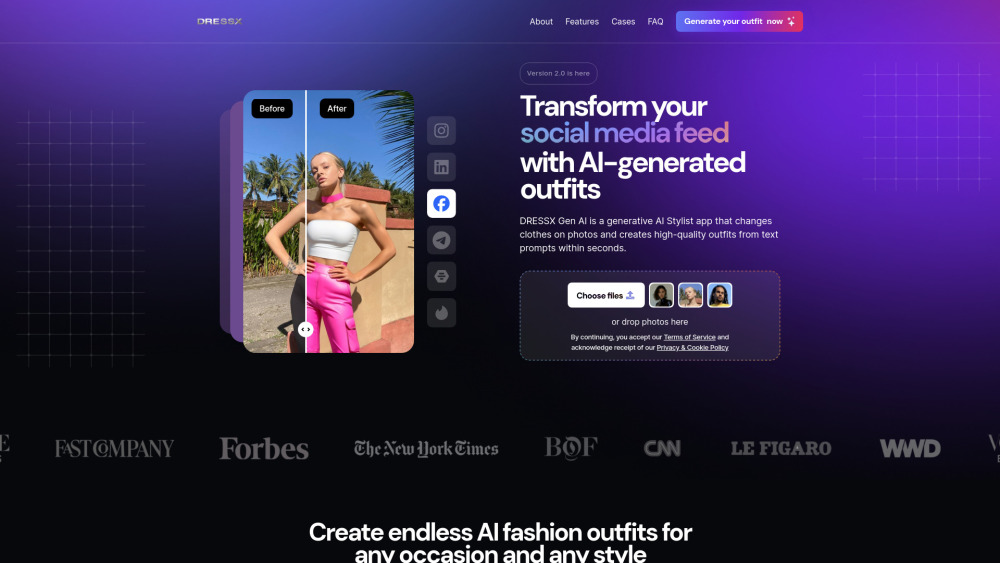

- Virtual try-on for fashion e-commerce platforms

- Personalized fashion recommendations

- Fashion design and prototyping

- Virtual fashion shows and events

- Fashion education and training

Pros and Cons of Wear-Any-Way: Manipulable Virtual Try-on via Sparse Correspondence Alignment

Pros

- State-of-the-art performance for standard virtual try-on

- Novel interaction form for customizing the wearing style

- Supports single/multiple garment try-on and model-to-model settings

- Allows freer, more flexible control over how garments are worn

- Has potential applications across the fashion industry

Cons

- May require additional computational resources

- May require expertise in computer vision and machine learning

- May have limitations in handling complex garments or poses

How to Use Wear-Any-Way: Manipulable Virtual Try-on via Sparse Correspondence Alignment

1. Assign control points on the garment image and the person image to customize the generation.

2. Generate the try-on result; sparse correspondence alignment aligns the garment with the assigned points, and standard virtual try-on without manual points is also supported.

3. Experiment with different input types and settings to achieve the desired results.

4. Use customizable generation to create personalized fashion recommendations.

5. Integrate Wear-Any-Way into fashion e-commerce platforms or virtual fashion shows.