Helicone - LLM Observability for Developers

Product Information

Key Features of Helicone - LLM Observability for Developers

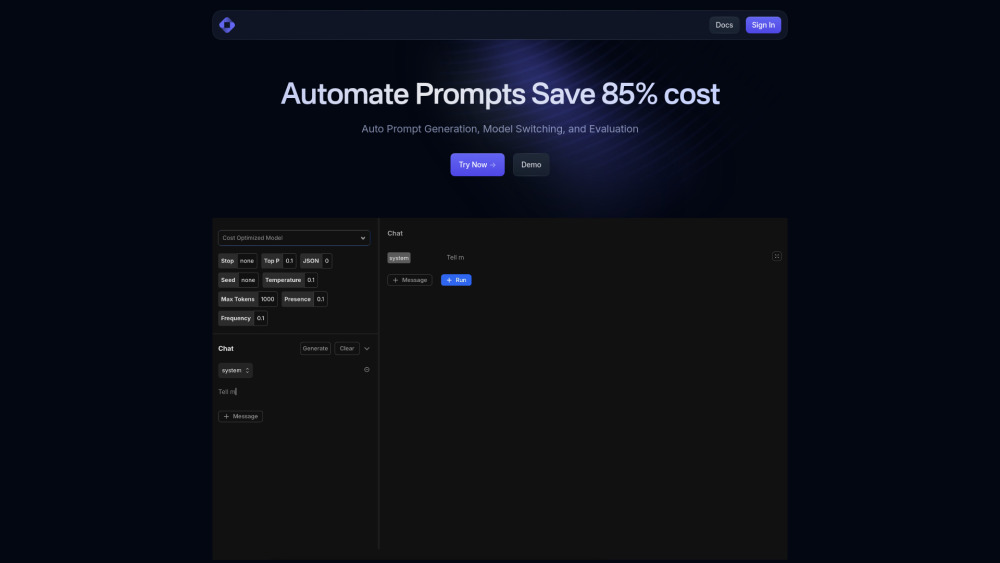

Helicone offers a range of features, including request filtering, instant analytics, prompt management, and 99.99% uptime. It also provides scalability and reliability, with sub-millisecond latency and risk-free experimentation.

Request Filtering

Filter, segment, and analyze your requests with Helicone's powerful filtering capabilities.
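To show what filtering and segmenting logged requests means in practice, here is a minimal sketch over an in-memory list. The `RequestLog` record, its fields, and the `filter_requests` / `segment_by` helpers are hypothetical illustrations, not Helicone's schema or API; they only show how matching criteria and grouping by a custom property work.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class RequestLog:
    # Hypothetical log record; field names are illustrative, not Helicone's schema.
    model: str
    status: int
    latency_ms: float
    properties: dict = field(default_factory=dict)


def filter_requests(logs, model=None, status=None, **properties):
    """Keep logs that match every supplied criterion (None means 'any')."""
    result = []
    for log in logs:
        if model is not None and log.model != model:
            continue
        if status is not None and log.status != status:
            continue
        # Every custom property passed as a keyword must match exactly.
        if any(log.properties.get(k) != v for k, v in properties.items()):
            continue
        result.append(log)
    return result


def segment_by(logs, key):
    """Group logs by the value of one custom property (missing values go under None)."""
    groups = defaultdict(list)
    for log in logs:
        groups[log.properties.get(key)].append(log)
    return dict(groups)


# Usage with made-up data ("model-a" / "model-b" are placeholder names).
logs = [
    RequestLog("model-a", 200, 820.0, {"feature": "chat", "env": "prod"}),
    RequestLog("model-b", 200, 310.0, {"feature": "search", "env": "prod"}),
    RequestLog("model-a", 429, 95.0, {"feature": "chat", "env": "staging"}),
]
prod_chat = filter_requests(logs, model="model-a", env="prod")
print(len(prod_chat))  # 1
print({k: len(v) for k, v in segment_by(logs, "feature").items()})  # {'chat': 2, 'search': 1}
```

Keyword arguments double as property filters here, which keeps the sketch short; a real system would more likely push these predicates into a database query.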

Instant Analytics

Get detailed metrics such as latency, cost, and time to first token with Helicone's instant analytics.
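The metrics named above can be derived from a few timestamps and token counts per request. The sketch below assumes a hypothetical `TimedRequest` record and made-up per-token prices (real prices vary by provider and model); it is not how Helicone computes them internally, only an illustration of what each metric measures.

```python
from dataclasses import dataclass


@dataclass
class TimedRequest:
    # Hypothetical record: timestamps in seconds, taken from the same clock.
    sent_at: float
    first_token_at: float
    completed_at: float
    prompt_tokens: int
    completion_tokens: int


# Hypothetical prices in USD per 1,000 tokens; substitute your provider's real rates.
PROMPT_PRICE_PER_1K = 0.0005
COMPLETION_PRICE_PER_1K = 0.0015


def request_metrics(req):
    """Return latency, time to first token (both in ms) and estimated cost in USD."""
    latency_ms = (req.completed_at - req.sent_at) * 1000
    ttft_ms = (req.first_token_at - req.sent_at) * 1000
    cost_usd = (
        req.prompt_tokens / 1000 * PROMPT_PRICE_PER_1K
        + req.completion_tokens / 1000 * COMPLETION_PRICE_PER_1K
    )
    return {"latency_ms": latency_ms, "ttft_ms": ttft_ms, "cost_usd": cost_usd}


req = TimedRequest(sent_at=10.0, first_token_at=10.25, completed_at=11.5,
                   prompt_tokens=1200, completion_tokens=400)
m = request_metrics(req)
print(m["latency_ms"], m["ttft_ms"])  # 1500.0 250.0
print(round(m["cost_usd"], 6))
```

Time to first token is measured separately from total latency because, for streamed responses, it is what the end user perceives as responsiveness.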

Prompt Management

Access features such as prompt versioning, prompt testing, and prompt templates with Helicone's prompt management.
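Prompt versioning and templates can be pictured as a registry that stores every published revision and fills variables at render time. The `PromptRegistry` class below is a hypothetical sketch built on Python's standard `string.Template`, not Helicone's prompt API.

```python
from string import Template


class PromptRegistry:
    """Hypothetical in-memory store of versioned prompt templates."""

    def __init__(self):
        self._versions = {}  # prompt name -> list of template strings (index 0 is v1)

    def publish(self, name, template):
        """Store a new version of a prompt and return its 1-based version number."""
        versions = self._versions.setdefault(name, [])
        versions.append(template)
        return len(versions)

    def render(self, name, version=None, **variables):
        """Fill a template's $placeholders; defaults to the latest version.

        Raises KeyError for an unknown prompt or a missing variable,
        and ValueError for a version number that does not exist.
        """
        versions = self._versions[name]
        if version is None:
            version = len(versions)
        if not 1 <= version <= len(versions):
            raise ValueError(f"{name!r} has no version {version}")
        return Template(versions[version - 1]).substitute(**variables)


registry = PromptRegistry()
registry.publish("summarize", "Summarize this text: $text")
v2 = registry.publish("summarize", "Summarize in $n bullet points: $text")
print(v2)  # 2
print(registry.render("summarize", version=1, text="Hello"))  # Summarize this text: Hello
print(registry.render("summarize", n=3, text="Hello"))  # Summarize in 3 bullet points: Hello
```

Keeping old versions addressable is what makes prompt testing possible: the same inputs can be rendered through version 1 and version 2 and the model outputs compared side by side.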

99.99% Uptime

Helicone leverages Cloudflare Workers to maintain low latency and high reliability, ensuring 99.99% uptime.
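For a sense of scale, an uptime percentage translates directly into a downtime budget: 99.99% leaves 0.01% of the period, which is about 52.56 minutes over a 365-day year or 4.32 minutes over a 30-day month. The helper below is a small illustrative calculation, not part of any Helicone tooling.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes; ignores leap years
MINUTES_PER_30_DAYS = 30 * 24 * 60  # 43,200 minutes


def downtime_budget_minutes(uptime_pct, period_minutes=MINUTES_PER_YEAR):
    """Maximum downtime allowed in a period for a given uptime percentage."""
    return period_minutes * (1 - uptime_pct / 100)


print(round(downtime_budget_minutes(99.99), 2))  # 52.56
print(round(downtime_budget_minutes(99.99, MINUTES_PER_30_DAYS), 2))  # 4.32
```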

Scalability and Reliability

Helicone describes itself as 100x more scalable than competitors, supporting reads and writes across millions of logs.

Use Cases of Helicone - LLM Observability for Developers

Improve your LLM application's performance with Helicone's request filtering and instant analytics.

Use Helicone's prompt management to access features such as prompt versioning and prompt testing.

Deploy Helicone on-prem for maximum security and scalability.

Contribute to Helicone's open-source codebase and help improve the platform for the community.

Pros and Cons of Helicone - LLM Observability for Developers

Pros

- Helicone is open-source and values transparency and community involvement.

- Helicone provides essential tools for developers to monitor and improve their LLM applications.

- Helicone offers scalability and reliability, with sub-millisecond latency and risk-free experimentation.

Cons

- Helicone may have a learning curve for developers who are new to LLMs and observability platforms.

- Helicone may require additional setup and configuration for on-prem deployment.

How to Use Helicone - LLM Observability for Developers

1. Sign up for a demo to try Helicone's features and see how it can improve your LLM application.

2. Deploy Helicone on-prem for maximum security and scalability.

3. Contribute to the Helicone community by joining the Discord channel and submitting issues on GitHub.

4. Use Helicone's open-source platform to improve your LLM application's performance and reliability.