Groq - Fast AI Inference for Openly-Available Models

Product Information

Key Features of Groq - Fast AI Inference for Openly-Available Models

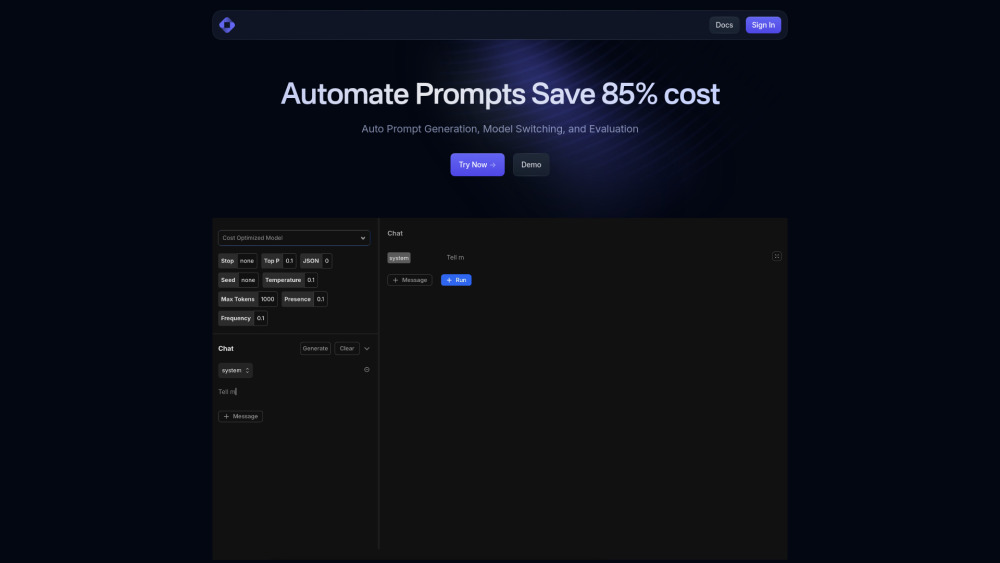

Groq offers ultra-low-latency AI inference, an API compatible with OpenAI endpoints, and support for openly-available models such as Llama 3.1.

Ultra-Low-Latency Inference

Groq's AI inference engine provides fast, reliable performance, with latency as low as 1 ms.

OpenAI Endpoint Compatibility

Groq's API is compatible with OpenAI endpoints, making it easy to integrate with existing workflows and tools.
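
To make the compatibility claim concrete, here is a minimal sketch of an OpenAI-style chat completion request aimed at Groq, using only the Python standard library. The base URL, the `GROQ_API_KEY` environment variable, and the model name `llama-3.1-8b-instant` are assumptions not stated on this page, and `build_chat_request` and `send` are hypothetical helper names; check the official Groq documentation for current values.

```python
import json
import os
import urllib.request

# Assumed base URL for Groq's OpenAI-compatible API; confirm in the Groq docs.
GROQ_BASE_URL = "https://api.groq.com/openai/v1"


def build_chat_request(prompt, model="llama-3.1-8b-instant", api_key=None):
    """Build (but do not send) an OpenAI-style chat completion request."""
    # The model name and the GROQ_API_KEY variable are illustrative assumptions.
    api_key = api_key or os.environ.get("GROQ_API_KEY", "")
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{GROQ_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # OpenAI-style responses carry the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Calling `send(build_chat_request("Hello"))` with a valid key should return the model's reply as a string. Because the request body follows the OpenAI chat format, tools that already speak that format generally only need a different base URL and API key.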

Support for Openly-Available Models

Groq supports a range of openly-available models, including Llama 3.1 and other models from leading AI research organizations.

Use Cases of Groq - Fast AI Inference for Openly-Available Models

Developing AI-powered applications with fast and reliable inference

Researching and testing AI models with ultra-low-latency performance

Integrating AI inference with existing workflows and tools through OpenAI-compatible endpoints

Pros and Cons of Groq - Fast AI Inference for Openly-Available Models

Pros

- Fast and reliable AI inference with ultra-low-latency performance

- Compatibility with OpenAI endpoints for easy integration

- Support for openly-available models from leading AI research organizations

Cons

- Limited support for proprietary models

- Enterprise use requires a paid plan

- May require additional setup and configuration for some use cases

How to Use Groq - Fast AI Inference for Openly-Available Models

1. Sign up for a free account on the Groq website

2. Explore the Groq API and developer tools

3. Integrate Groq with your existing workflows and tools using its OpenAI-compatible endpoints
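
The steps above can be sketched in Python with the standard library alone: load the API key created after sign-up, then explore the API by listing the available models. This is an illustrative outline, not an official client. The base URL, the `GROQ_API_KEY` variable name, and the helper names `load_api_key`, `build_models_request`, `model_ids`, and `list_models` are assumptions introduced here.

```python
import json
import os
import urllib.request

# Assumed base URL for Groq's OpenAI-compatible API; confirm in the Groq docs.
GROQ_BASE_URL = "https://api.groq.com/openai/v1"


def load_api_key(env=os.environ):
    """Step 1: read the key created after sign-up from the environment."""
    key = env.get("GROQ_API_KEY")  # variable name is an assumption
    if not key:
        raise RuntimeError("Set GROQ_API_KEY before calling the API")
    return key


def build_models_request(api_key):
    """Step 2: prepare a GET request for the OpenAI-style model listing."""
    return urllib.request.Request(
        url=f"{GROQ_BASE_URL}/models",
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


def model_ids(listing):
    """Extract sorted model identifiers from an OpenAI-style list response."""
    return sorted(item["id"] for item in listing.get("data", []))


def list_models(api_key):
    """Send the listing request and return the model identifiers."""
    with urllib.request.urlopen(build_models_request(api_key)) as response:
        return model_ids(json.load(response))
```

Running `list_models(load_api_key())` should return the model identifiers your account can use. For step 3, an existing OpenAI-style integration would typically be pointed at the same base URL with the Groq key in place of the original one.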